<<Back to DB Administration Main Page

What is Oracle Database Block

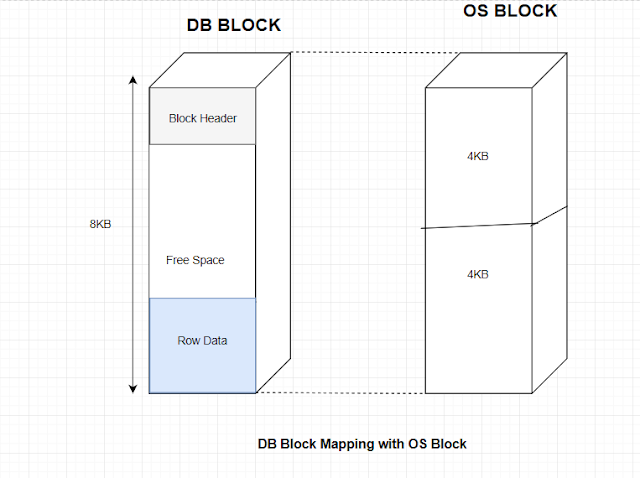

An oracle data block (DB Block) is a logical unit of data storage. And is the smallest unit of IO for oracle database. The data is physically stored in datafiles (disk files) made up of operating system blocks (OS Blocks). The size of OS blocks can be different than the Oracle DB Block. The OS handles IO in OS blocks while oracle always reads, write data in multiple of DB blocks. The size of oracle DB block can be defined using DB_BLOCK_SIZE init parameter (default 8k ) at the time of database creation and can not be changed later.You can configure multiple database block size in a database. To be specific you can define block size

for each tablespace (except SYSTEM, SYSAUX).

Oracle Data Block Structure

Now that you know what is oracle data block, Lets have a look how does it looks like. As you can see in the picture above the oracle DB block got a header (also known as block overhead) on top and data on bottom and the free space lies in between . Database uses the header information to track the data and free space in the block.

An Oracle block overhead consists of header, table directory and row directory and oracle needs this information to manage the block itself.

Block header => Block header holds general information about the block eg address of the block on disk, segment type, Transaction information etc.Table directory => This directory holds information like table metadata whose rows are stored in this block.

Row directory => In the form of row address, this holds the information to locate the rows in the block.

The block overhead totals between 84 to 107 bytes.

Like DB Block header every row in the block has its own header, we can call it row header. The row header of each row contains the information about the data stored in that particular row. Before going deep into Oracle DB Block lets have a look at Oracle ROW format as well.

Oracle Database ROW Format

Each row in a block is called row piece. Each row piece has a row header and column data as stated above.

Column Data => This hold the actual data in the row.

ROW Header => Row header contains information like

- Number of columns in the row.

- Information about chained and migrated rows.

- Cluster key for table cluster (if applicable) etc.

Oracle database usages ROWID to uniquely identify a row in the database. The row id is not physically stored in the database, but computed using the file id and block id.

To know more about oracle ROW ID READ HERE

That's said lets get back to our original topic for this Blog ie Oracle Database Block, And examine the facts little more in detail. And to do so I have to dump a block and walk you through the dumpfile.

Steps to dump an oracle DB block is provided HERE

Start dump data blocks tsn: 11 file#:64 minblk 132 maxblk 132

tsn: 11 => v$tablespace.TS#

file#:64 => v$datafile.FILE#

minblk & maxblk => dba_extents.BLOCK_ID (range of block ids dumped)

So from this line you can find the rage of blocks dumped (ie 132 to 132- just 1 block) from datafile#64 and Tablespace#11

file#:64 => v$datafile.FILE#

minblk & maxblk => dba_extents.BLOCK_ID (range of block ids dumped)

So from this line you can find the rage of blocks dumped (ie 132 to 132- just 1 block) from datafile#64 and Tablespace#11

Block dump from disk: <= self explanatory

buffer tsn: 11 rdba: 0x10000084 (64/132)

buffer tsn: 11 => Tablespace Number

rdba 0x10000084 (64/132) => Relative database Block Address.

RDBA is computed using datafile# and block_id and we can verify this as shown below. I got 268435588, decimal equivalent of rdba: 0x10000084. You can find the converter below to convert hex to decimal and vice versa

Lets compute the RDBA using file# (64) and block id (132)

SQL> select DBMS_UTILITY.MAKE_DATA_BLOCK_ADDRESS(64,132) from dual;

DBMS_UTILITY.MAKE_DATA_BLOCK_ADDRESS(64,132)

--------------------------------------------

268435588

--------------------------------------------

268435588

And we got the same number. We can even extract the file# and Block id from the decimal equivalent of RDBA

SQL> select DBMS_UTILITY.DATA_BLOCK_ADDRESS_FILE(268435588) from dual;

DBMS_UTILITY.DATA_BLOCK_ADDRESS_FILE(268435588)

-----------------------------------------------

64

-----------------------------------------------

64

1 row selected.

SQL> select DBMS_UTILITY.DATA_BLOCK_ADDRESS_BLOCK(268435588) from dual;

DBMS_UTILITY.DATA_BLOCK_ADDRESS_BLOCK(268435588)

------------------------------------------------

132

------------------------------------------------

132

1 row selected.

And therefore we concluded that the RDBA is calculated using file# and Block id

scn: 0x0.3f1c831 seq: 0x01 flg: 0x06 tail: 0xc8310601

scn: 0x0.3f1c831 => scn the block last modified at

seq: 0x01 => block sequence on this scn ( this tells, how many times a block is modified at this scn)

flg: 0x06=> some kind of flag

tail: 0xc8310601=> SCN + block type + SCN sequence number used to ensure the block consistency

seq: 0x01 => block sequence on this scn ( this tells, how many times a block is modified at this scn)

flg: 0x06=> some kind of flag

tail: 0xc8310601=> SCN + block type + SCN sequence number used to ensure the block consistency

frmt: 0x02 chkval: 0xf684 type: 0x06=trans datafrmt: 0x02 => Oracle7 formated or >=Oracle8 formated block

chkval: 0xf684 => block integrity checking and configured using db_block_checksum init parameter

type: 0x06=trans data => block types

chkval: 0xf684 => block integrity checking and configured using db_block_checksum init parameter

type: 0x06=trans data => block types

Object id on Block? Y => object id present on block.

seg/obj: 0x182ed csc: 0x00.3f1c577 itc: 2 flg: E typ: 1 - DATA

seg/obj: 0x182ed => segment/object number

SQL>select to_number('182ed', 'xxxxxxxxxx') from dual;

SQL> select OWNER,OBJECT_NAME,OBJECT_ID from dba_objects where OBJECT_ID='132877586';

seg/obj: 0x182ed => segment/object number

SQL>select to_number('182ed', 'xxxxxxxxxx') from dual;

SQL> select OWNER,OBJECT_NAME,OBJECT_ID from dba_objects where OBJECT_ID='132877586';

csc: 0x00.3f1c577 => Cleanout System Change number

itc: 2 => interested transaction list count. There are 2 transactions interested in this block. defaults to 1 and is maintained by storage parameters INITRANS and MAXTRANS.flg: E => Free list flag

itc: 2 => interested transaction list count. There are 2 transactions interested in this block. defaults to 1 and is maintained by storage parameters INITRANS and MAXTRANS.flg: E => Free list flag

Itl Xid Uba Flag Lck Scn/Fsc

0x01 0x0007.020.00007dd6 0x014004e0.2d71.0a --U- 1 fsc 0x0000.03f1c831

0x02 0x0000.000.00000000 0x00000000.0000.00 ---- 0 fsc 0x0000.00000000

Itl => The array index for the list, This value is used in the lock byte (lb:) for a row to show

which transaction has locked the row

Xid => The transaction id of a recent transaction that has modified this block. undo segment . undo slot . undo sequence number

Uba => The undo record address. Absolute block address . block sequence number . record within block

Flag=> flag identifying transaction state

----: active (or “never existed” if every field in the Xid is zero).

--U-: Upper bound commit (also set during “fast commit”).

C---: Committed and cleaned out (all associated lock bytes have been reset to zero).

-B--: May be relevant to the recursive transactions for index block splits.

which transaction has locked the row

Xid => The transaction id of a recent transaction that has modified this block. undo segment . undo slot . undo sequence number

Uba => The undo record address. Absolute block address . block sequence number . record within block

Flag=> flag identifying transaction state

----: active (or “never existed” if every field in the Xid is zero).

--U-: Upper bound commit (also set during “fast commit”).

C---: Committed and cleaned out (all associated lock bytes have been reset to zero).

-B--: May be relevant to the recursive transactions for index block splits.

Lck=> Number of rows locked by this transaction in this block.

Scn/Fsc => Depending on the Flag, the commit SCN or the number of bytes of free space that would become available if this transaction committed

Scn/Fsc => Depending on the Flag, the commit SCN or the number of bytes of free space that would become available if this transaction committed

tsiz: 0x1f98 => total available space in the block

hsiz: 0x14 => current header size

pbl: 0x7f4533ccf264

76543210

flag=--------

ntab=1 => number of tables in this block. Unless this block belongs to a cluster, the value is 1

nrow=1 => number of rows stored in this block.

frre=-1

fsbo=0x14 => Beginning Of Free Space

fseo=0x1f8c => End Of Free Space

avsp=0x1f78 => Available Space

tosp=0x1f78 => Total space

0xe:pti[0] nrow=1 offs=0 => There are 1 rows in table 0, starting at row 0

0x12:pri[0] offs=0x1f8c => Pointer to the row of table 0

hsiz: 0x14 => current header size

pbl: 0x7f4533ccf264

76543210

flag=--------

ntab=1 => number of tables in this block. Unless this block belongs to a cluster, the value is 1

nrow=1 => number of rows stored in this block.

frre=-1

fsbo=0x14 => Beginning Of Free Space

fseo=0x1f8c => End Of Free Space

avsp=0x1f78 => Available Space

tosp=0x1f78 => Total space

0xe:pti[0] nrow=1 offs=0 => There are 1 rows in table 0, starting at row 0

0x12:pri[0] offs=0x1f8c => Pointer to the row of table 0

block_row_dump:

tab 0, row 0, @0x1f8c => table 0 , row 0, starting byte position 0x1f8c

tl: 12 fb: --H-FL-- lb: 0x1 cc: 2

tl: 12 => total number of bytes for this row.

fb: --H-FL-- => row flag value

H=> head of the row

F=> First piece of the row

L=> Last piece of the row

means we have the complete row in the block.

lb: 0x1 => lock byte, coming from Itl

cc: 2 => column count

col 0: [ 2] c1 02 => column number and their values in Hexadecimal format

col 1: [ 5] 54 45 53 54 31 => column number and their values in Hexadecimal format

end_of_block_dump

tab 0, row 0, @0x1f8c => table 0 , row 0, starting byte position 0x1f8c

tl: 12 fb: --H-FL-- lb: 0x1 cc: 2

tl: 12 => total number of bytes for this row.

fb: --H-FL-- => row flag value

H=> head of the row

F=> First piece of the row

L=> Last piece of the row

means we have the complete row in the block.

lb: 0x1 => lock byte, coming from Itl

cc: 2 => column count

col 0: [ 2] c1 02 => column number and their values in Hexadecimal format

col 1: [ 5] 54 45 53 54 31 => column number and their values in Hexadecimal format

end_of_block_dump

Using Hex to ASCII converter you can read the content of table from this code.

Hex to ASCII Convertor

Hex to ASCII Convertor

NOTE:- There are too many things and its nearly impossible to reverse engineer the oracle DB block.

depending the type of block you dump and the status of the block at the moment you are dumping etc you will find more or less information in the dump file.

The overall objective of the post is to provide you an idea, about the oracle database block & row format and how oracle manages them

I hope you like the content. Please feel free to write your comment , feedback , suggestion in the comment section below.

Related Posts

Everything A Dba Need To Know About Oracle Data Block >>>>> Download Now

ReplyDelete>>>>> Download Full

Everything A Dba Need To Know About Oracle Data Block >>>>> Download LINK

>>>>> Download Now

Everything A Dba Need To Know About Oracle Data Block >>>>> Download Full

>>>>> Download LINK 6V